The studies show that parallel programs incur substantially higher miss ratios and bus utilization than comparable uniprocessor programs. The analysis determined the effect of shared memory accesses on cache miss ratio and bus utilization by focusing on the sharing component of these metrics. The cache and bus behavior of parallel programs running under write-invalidate protocols was evaluated over various block and cache sizes. Block size was crucial for modeling write-invalidate, because the pattern of memory references within a block determines protocol performance. Successive refinements, incorporating architecture-dependent parameters, most importantly cache block size, produced acceptable predictions for write-invalidate. Architecturally detailed simulations validated the model for write-broadcast. The model was used to predict the relative coherency overhead of write-invalidate and write-broadcast protocols. An architecture-independent model of write sharing was developed, based on the inter-processor activity to write-shared data. Whether a program exhibits sequential of fine-grain sharing affects several factors relating to multiprocessor performance: the accuracy of sharing models that predict cache coherency overhead, the cache miss ratio and bus utilization of parallel programs, and more » the choice of coherency protocol.

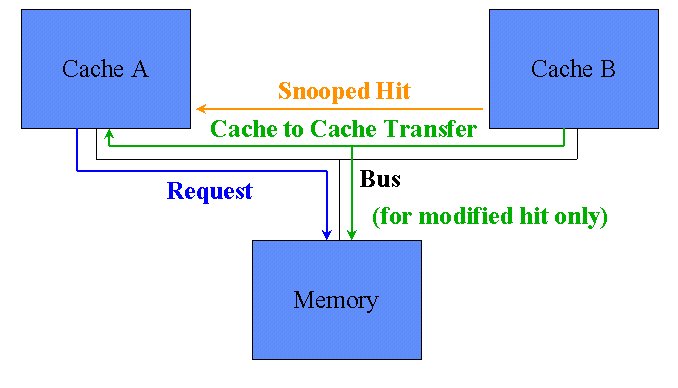

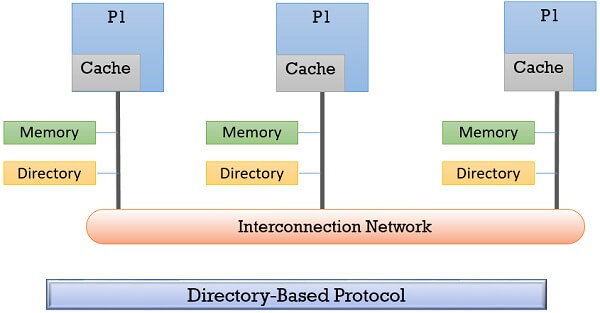

Under fine-grain sharing, processors contend for these words, and the number of per-processor sequential writes is low. In sequential sharing, a processor makes multiple, sequential writes to the words within a block, uninterrupted by accesses from other processors. The study reveals two distinct modes of sharing behavior. This dissertation examines shared memory reference patterns in parallel programs that run on bus-based, shared memory multiprocessors. Previous definitions of cache coherence are shown to be inadequate and a new definition is = , Previous protocols for general interconnection networks are shown to contain flaws and to be costly to implement and a new class of protocols is presented. The simulation model and parameters are described in detail. In each category, a new protocol is presented with better performance than previous schemes, based on simulation results. Previously proposed shared-bus protocols are described using uniform terminology, and they are shown to divide into two categories invalidation and distributed write. Protocols for shared-bus systems are shown to be an interesting special case. This dissertation explores possible solutions to the cache-coherence problem and identifies cache-coherence protocols - solutions implemented entirely in hardware - as an attractive alternative. The cache coherence problem is keeping all cached copies of the same memory location identical.

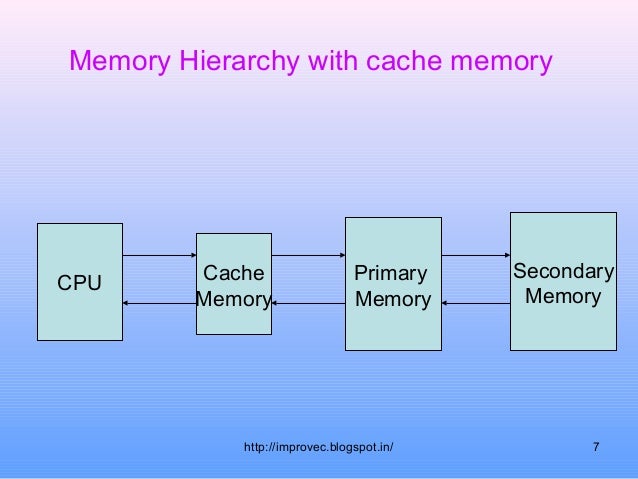

Adding a cache memory for each processor reduces the average access time, but it creates the possibility of inconsistency among cached copies. However, sharing memory between processors leads to contention that delays memory accesses.

Shared-memory multiprocessors offer increased computational power and the programmability of the shared-memory model.
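The difference between sequential and fine-grain sharing can be made concrete by measuring how many writes one processor makes to a block before another processor touches it. The sketch below is only an illustration under stated assumptions, not the method used in the dissertations: the trace format `(processor_id, op, address)`, the function names `write_run_lengths` and `mean_run_length`, and the default 16-byte block size are all hypothetical.

```python
from collections import defaultdict


def write_run_lengths(trace, block_size=16):
    """Return, per block, the lengths of uninterrupted per-processor write runs.

    trace: iterable of (processor_id, op, address), where op is "R" or "W".
    A run is a series of writes by one processor to a block; it ends when a
    different processor reads or writes that block. (Hypothetical trace
    format, chosen for illustration only.)
    """
    current = {}                # block -> (processor in control, writes in run)
    runs = defaultdict(list)    # block -> list of completed run lengths
    for proc, op, addr in trace:
        block = addr // block_size
        owner, count = current.get(block, (None, 0))
        if proc != owner:
            # An access from another processor interrupts the current run.
            if count:
                runs[block].append(count)
            owner, count = proc, 0
        if op == "W":
            count += 1
        current[block] = (owner, count)
    # Close the runs that were still open when the trace ended.
    for block, (_, count) in current.items():
        if count:
            runs[block].append(count)
    return dict(runs)


def mean_run_length(runs):
    """Average run length over all blocks; 0.0 if there were no writes."""
    lengths = [n for per_block in runs.values() for n in per_block]
    return sum(lengths) / len(lengths) if lengths else 0.0


# One processor writes every word of a block before another reads it.
sequential = [(0, "W", 0), (0, "W", 4), (0, "W", 8), (0, "W", 12), (1, "R", 0)]
# Two processors alternate writes to words of the same block.
fine_grain = [(0, "W", 0), (1, "W", 4), (0, "W", 8), (1, "W", 12)]

print(mean_run_length(write_run_lengths(sequential)))
print(mean_run_length(write_run_lengths(fine_grain)))
```

Long average runs correspond to sequential sharing and short runs to fine-grain sharing. Because the block index is derived from `block_size`, the same trace can yield different run lengths at different block sizes, which is one way to see why block size matters when modeling write-invalidate.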
